Part of our series of posts by final-year undergraduate students for their Research Comprehension module. Students write blogs inspired by guest lecturers in our Evolutionary Biology and Ecology seminar series in the School of Natural Sciences.

This week, views from Gina McLoughlin and Joanna Mullen on Fiona Doohan’s seminar, “Plant-Microbe interactions – the good, the bad and the ugly”

GM Crops Don’t Kill

Genetically modified (GM) crops are crops that have been modified using genetic engineering techniques to introduce qualities, or traits, that do not occur naturally in that plant. Usually, the genes for the desirable trait are taken from one plant and inserted into the genome of another strain of that plant, although they can also come from other species. However, because of this engineering, many people think that GM crops pose a serious health hazard, and there is a lot of tension around the topic. This tension stems mostly from big companies, like Monsanto, that have poor track records and design GM crops for patents and profits instead of solving food problems.

However, there are many researchers working on GM crops to try to solve food problems and ensure there is enough food to feed the world’s growing population. Dr Fiona Doohan is a senior lecturer in the School of Biology and Environmental Science at UCD, and her research focuses on food security. The work she presented in her lecture dealt with how to enhance disease resistance in cereal crops, specifically resistance to the disease Fusarium head blight (FHB) in wheat. Wheat is the second largest source of calories, after maize, yet it is produced in only a small part of the world. FHB is a huge problem for farmers because it causes serious yield losses that they cannot afford. Controlling FHB with fungicides, which give inconsistent results, costs about €9 million per year, so Doohan and her team are looking for a better way to protect these crops.

Deoxynivalenol (DON) is a mycotoxin that commonly causes the damage associated with this disease. DON is toxic to humans, animals and plants (Rocha et al., 2005), so it is very important that it does not get into the food chain. DON also aids the spread of the disease in wheat heads and increases the severity of FHB symptoms (Bai et al., 2001). It causes bleaching of the wheat heads, alters membrane structures and causes cell death. DON also inhibits seed germination, shoot and root growth, root generation and protein synthesis (Rocha et al., 2005). Some wheat strains are resistant to DON, and the genes responsible for this resistance are being identified (Walter et al., 2008).

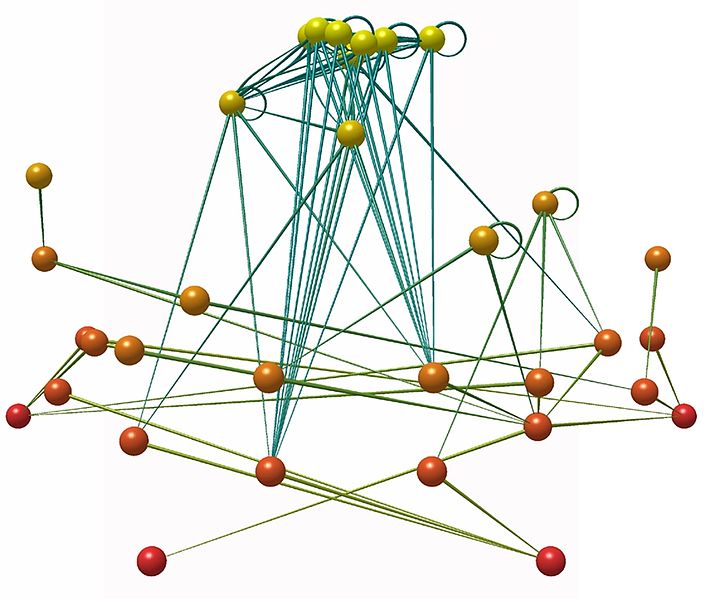

Doohan and her team wanted to examine how different strains of wheat react to DON. They compared two strains, Remus and CM82036, to try to find the mechanism that confers DON resistance. Remus is the strain used in cultivation because it has more desirable qualities than CM82036, but it is susceptible to DON. Using differential display reverse transcription PCR (DDRT-PCR) and microarrays, the team obtained a list of genes that could be involved in DON resistance. One of the genes on this list was an orphan gene: a gene with no significant homology to any known gene, which had never been described before. Doohan and her team are doing further research into this orphan gene, and hopefully it will provide a route to adding DON resistance to the Remus strain.

Doohan’s work may be essential to human survival if the population keeps rising, and people need to start trusting research and open their minds to GM crops. Research has found no adverse effects from using GM crops; they are no less safe than crops modified using conventional improvement techniques, and they pose no additional risk to human health or to the environment. GM crops also have many benefits that people overlook. Some require fewer chemicals to protect them, such as pest-resistant cotton, and they can benefit farmers because they are more reliable and more resistant to stress. Some farmers are so eager to use these crops that the seeds have been pirated in; Bt cotton, for example, was pirated into India. GM techniques are also safer and more precise than mutagenesis techniques.

However, I don’t think that this evidence and these benefits are enough to change society’s negative opinion of GM crops. I think people need to be shown that scientists like Doohan are now producing GM crops for the public’s benefit. In my opinion, we need to change the negative attitude toward GM crops that the big companies have created, and unfortunately, convincing consumers may take a very long time and a lot of effort. If we are to feed 9.5 billion people on the land available for growing food, with limited water, pesticides and fertilizer, and with a rapidly changing climate, it makes sense to look at alternative ways to ensure human survival. Maybe GM crops are the answer.

Author: Gina McLoughlin

————————————————————–

Are we just clutching at straws, or is there a grain of hope in the battle to save our crops from destruction?

Food: we can’t live without it, and we can’t live on it if it’s diseased. This is the motivation behind the work of Fiona Doohan and her team in UCD, who are striving to improve the security of the world’s food supply by improving the resistance of cereals such as wheat and barley to the many diseases that currently threaten their very existence. During her seminar on Friday the 22nd of November in Trinity’s Botany Lecture Theatre, she outlined the main areas on which her work focuses. At the heart of this is research on plant disease control and stress resistance, as well as the potential influences of climate change and adaptation to disease.

Approximately 2,332 million tonnes of cereals are currently used worldwide each year, and cereals are considered a universal staple of the human diet, which makes Doohan’s research all the more important.

During her talk, she discussed one of the main diseases of interest to her group, “Fusarium Head Blight Disease” (FHB). This is a fungal disease affecting wheat and barley crops, which causes visible bleaching of the infected cereals soon after infection.

The real trouble with this fungus, however, is that it produces a mycotoxin known as deoxynivalenol (DON), which significantly reduces crop yields. Importantly, DON can also have toxic effects on animals and humans, so infected crops are not allowed to enter the food market, resulting in a significant loss of revenue for farmers.

The big problem when attempting to control and prevent FHB is that fungicides have proven to have little effect on it.

One of the big steps forward in tackling this fungus was the discovery of the significant role DON plays in spreading and maintaining the infection. Research has shown that if the DON toxin is knocked out early on, the infection is reduced and the bleaching symptoms do not develop. Without the DON toxin, there are also no adverse toxic effects for humans and animals, which removes the food safety concern.

Interestingly, not all wheat is susceptible to FHB and DON; some exotic wheats are naturally resistant to the toxin and the fungal disease. This has led researchers to ask which mechanisms and genes underlie resistance to DON and FHB. Using gene expression studies to isolate possible genes associated with DON resistance, Doohan’s team have discovered several genes of interest, most notably one particular orphan gene.

Orphan genes are genes that are restricted to a certain lineage. They are particularly important in stress resistance but are often overlooked. Doohan’s team have centred their research efforts on the role this orphan gene plays in DON resistance. What matters is not the gene’s tissue specificity per se but its specificity to DON: the orphan gene has no effect on DON-mutant strains of the fungus. However, when artificially expressed in a non-exotic strain of wheat that is normally susceptible when infected, the orphan gene has been shown to counteract DON, thus inhibiting the development of FHB.

Though these results are very encouraging, the true significance of this discovery, and whether it can be applied practically to crop production, remain to be tested. Unfortunately, due to strict European laws, the first field trials of the genetically modified crops are likely to take place in the USA. However, Doohan’s research does offer a glimmer of hope against FHB, a rather bleak, fungus-covered problem, and its (g)rain of terror on stalks of wheat all over Europe.

Author: Joanna Mullen

Image Source: Wikimedia Commons